Get 3 extra months

Black Friday deal: 74% off NordVPN

Don't miss the biggest cybersecurity sale of the year.

Secure up to 10 devices and enjoy ultra-fast speeds.

Avoid downloading malware with Threat Protection Pro™.

What is a VPN?

VPN stands for “virtual private network.” A VPN service gives you safe and private access to the internet. A VPN encrypts your connection and routes it through a VPN server, which changes your IP address and virtual location while protecting your online activity from prying eyes and keeping your data safer from cybercriminals.

Why NordVPN?

Thanks to all kinds of nifty, cutting-edge technology, NordVPN keeps your devices malware-free and your online activities safe from strangers’ eyes. How safe? It would take the world’s most powerful computer billions of years to decrypt your data. That’s how safe.

We know you have emails to send, games to beat, and videos to watch, so NordVPN is designed to deliver the best VPN connection speeds possible. You get unlimited data, thousands of VPN servers worldwide, and modern VPN protocols that work together to give you a fast internet experience without interruptions.

With NordVPN, you can browse like no one’s watching, because no one is. We don’t track what you do online (and have undergone audits to prove it). And if you want extra privacy, you can instantly double your protection by connecting to Double VPN servers, which encrypt your data and change your IP address twice.

Available for all your devices

Use a VPN on up to 10 devices and enjoy secure and private access to the internet, even on public Wi-Fi.

Sit back, relax, and browse in confidence

Malware Protection

A safer, better, easier digital life: Threat Protection Pro™ blocks malware before it downloads.

Remote file access

All your devices are just a click away. Wherever you go, connect to them safely with Meshnet.

Dark Web Monitor

A VPN service that protects your accounts: NordVPN sends instant alerts if your email address is leaked.

Take your online security to the next level

Safe browsing

Enjoy an effortless experience every time you go online: avoid downloading malware and browse safely, free from snoopers, trackers, and ads.

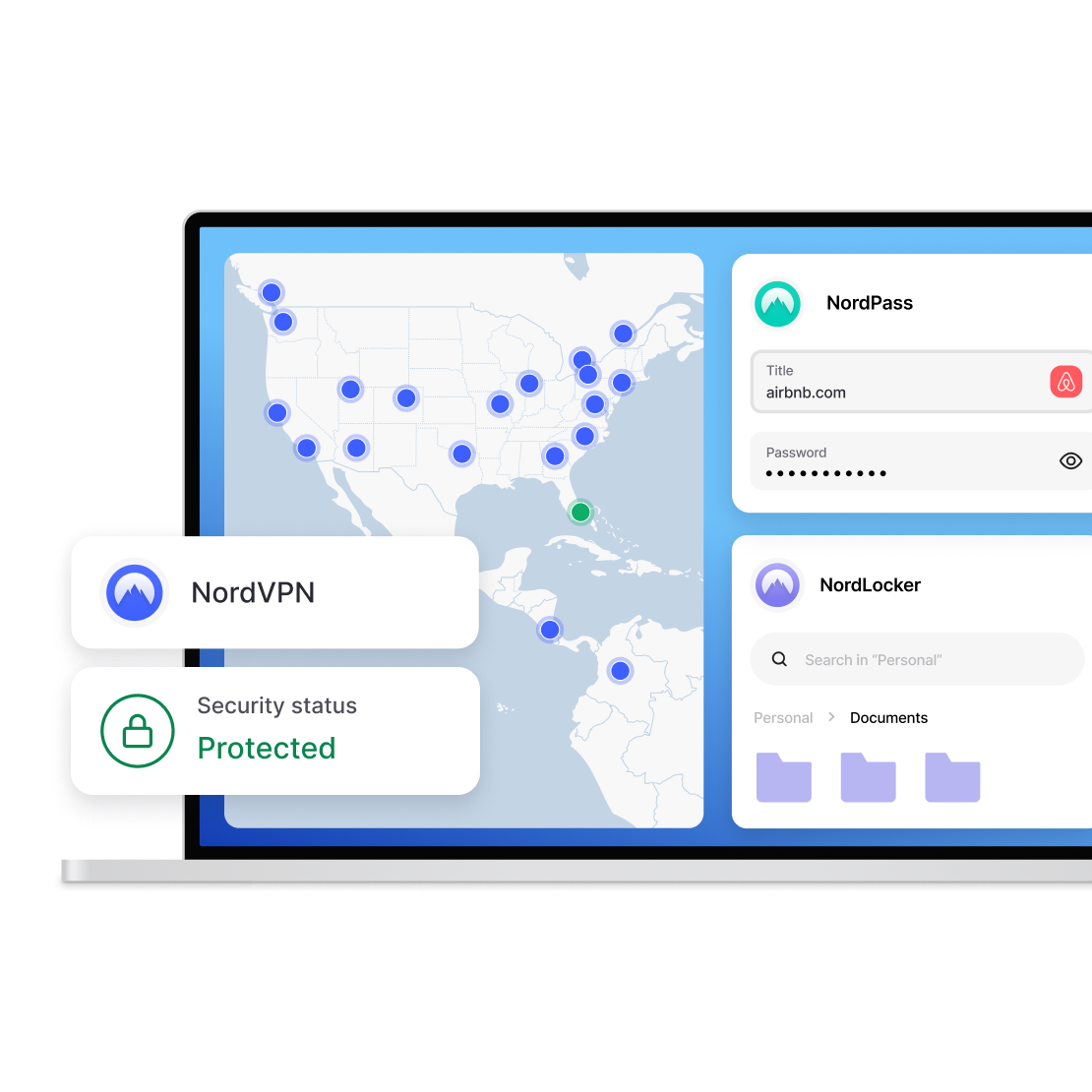

Password security

Add a premium password manager to the mix: it will generate, auto-fill, and store your passwords for you. No more endless resets!

Encrypted files

Go all out and have your files encrypted, backed up, and always within reach in a secure cloud. Share securely, sync effortlessly, and rest easy.

A one-stop shop for safe browsing, passwords, and files

Pick the best plan for your needs and rest easy knowing all aspects of your digital life are protected at all times.

Trusted by tech experts and users

Is NordVPN the best VPN service for online privacy? Let’s ask our users and tech experts.

As VPN services go, it's hard to beat NordVPN. It has a large and diverse collection of servers, an excellent collection of advanced features, strong privacy and security practices, and approachable clients for every major platform.

Max Eddy

Software analyst, PCMag

The bottom line here is: When you’re online, you don’t have to worry about being secure or about your information getting out there if you have a VPN. NordVPN makes it simple.

Tech of Tomorrow

Tech reviewer, YouTube

Black Friday: Don't miss the biggest VPN sale of the year

74% discount + 3 months extra

30-day money-back guarantee

Take a look at our promotion’s terms and conditions.