How we automated our content translation QA tests

One of the most significant challenges our QA engineers used to face was the daily testing of multiple pages across several languages. Publicly accessible websites change frequently, and verifying all of those changes across all of those pages and all of those languages took a lot of work.

This post explains how we automated those processes to ensure quality without sacrificing speed or flexibility. As a lead QA automation engineer, I am responsible for automating tests on every aspect of Nord Security’s three main products. This includes designing test libraries and a unified framework architecture that covers both user interface and backend API regression testing.

The challenge

Nord Security’s web domains contain thousands of pages in many different languages, and verifying every update to them is simply too much for QA engineers to do manually. It would mean using dictionary tools on every affected page to check that each label makes sense and is in the correct language. We needed a more sophisticated solution.

The main goal was to reduce human effort as much as possible, so we decided to automate as many content testing steps as we could.

First, we had to figure out how to test labels in different languages. Keeping up with every label change in every supported language would be nearly impossible, and our test maintenance resources are limited, so hard-coding text values was not an option. The tool had to be able to evaluate all supported languages on its own.
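The post does not describe the detector's interface, so the following is only a minimal sketch of the idea: each extracted label is passed to a language detector, and any label whose detected language differs from the page's locale is flagged for review. The `detect` callable, the `min_length` threshold and `toy_detect` are hypothetical; `toy_detect` is a trivial keyword stand-in for the AI module, included only so the example runs.

```python
from typing import Callable, Dict, List

# Hypothetical detector signature: maps a text label to an ISO 639-1 code
# such as "de" or "fr". In the setup described above, an AI module fills this role.
LanguageDetector = Callable[[str], str]


def find_language_mismatches(
    labels: List[str],
    expected_lang: str,
    detect: LanguageDetector,
    min_length: int = 4,
) -> List[Dict[str, str]]:
    """Return labels whose detected language differs from the page locale."""
    mismatches = []
    for label in labels:
        text = label.strip()
        # Very short strings ("OK", "FAQ", prices) are too ambiguous to classify.
        if len(text) < min_length:
            continue
        detected = detect(text)
        if detected != expected_lang:
            mismatches.append({"label": text, "expected": expected_lang, "detected": detected})
    return mismatches


def toy_detect(text: str) -> str:
    """Toy stand-in detector: 'de' if a common German word appears, else 'en'."""
    german_words = {"und", "der", "die", "das", "jetzt", "mit"}
    return "de" if german_words & set(text.lower().split()) else "en"


if __name__ == "__main__":
    labels = ["Jetzt mit VPN surfen", "Get NordVPN", "OK"]
    print(find_language_mismatches(labels, "de", toy_detect))
    # → [{'label': 'Get NordVPN', 'expected': 'de', 'detected': 'en'}]
```

Injecting the detector keeps the check independent of any particular model, and brand names that legitimately stay untranslated would need an allowlist in practice.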

Second, the tool had to handle any page or subpage. Updating it every time a new page or subpage is released would be impractical, so it had to adapt to new pages instantly.
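The post does not say how the tool discovers new pages. One common approach, shown here purely as an assumption, is to read the site's XML sitemap on every run so that newly published pages are picked up without code changes. This sketch uses only Python's standard library, expects the standard sitemaps.org namespace, and does not follow nested sitemap index files.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(sitemap_xml: str, path_prefix: str = "") -> List[str]:
    """Extract page URLs from a sitemap, optionally keeping only one prefix (e.g. a locale)."""
    root = ET.fromstring(sitemap_xml)
    urls = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        if url and url.startswith(path_prefix):
            urls.append(url)
    return urls
```

Filtering by a prefix such as `https://example.com/de/` is one way to scope a run to a single locale.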

Third, we had to extract all text labels from any page. That meant we could not rely on element-specific selectors; the extraction had to be universal. This is partly due to how our website is presented to customers: some content is only visible from IP addresses in specific countries, so we also needed a way to iterate through IP locations.
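A selector-free way to collect labels is to walk every text node in the HTML instead of targeting specific elements. The sketch below is an illustration, not the authors' implementation: it uses Python's built-in `html.parser`, skips elements that are never rendered, such as `<script>` and `<style>`, and could be fed the HTML returned by Selenium's `driver.page_source`. It does not detect elements hidden with CSS; that would need a browser-based visibility check.

```python
from html.parser import HTMLParser
from typing import List


class VisibleTextExtractor(HTMLParser):
    """Collect every non-empty text node, ignoring elements that are never rendered."""

    SKIP_TAGS = {"script", "style", "noscript", "template"}

    def __init__(self) -> None:
        super().__init__()
        self.labels: List[str] = []
        self._skip_depth = 0  # > 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse runs of whitespace
        if text and self._skip_depth == 0:
            self.labels.append(text)


def extract_labels(html: str) -> List[str]:
    """Return all text labels in document order."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.labels


if __name__ == "__main__":
    page = "<h1>Jetzt  sichern</h1><script>var x = 1;</script><p>Get <b>NordVPN</b></p>"
    print(extract_labels(page))  # ['Jetzt sichern', 'Get', 'NordVPN']
```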

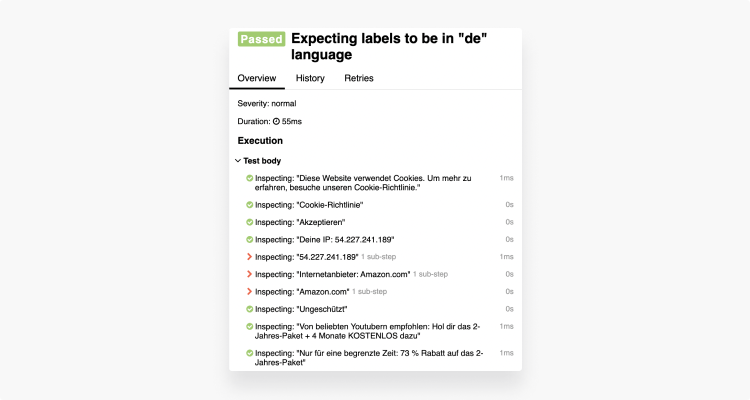

In the end, a human still has to finalize the evaluation of the test results, so we also needed a sophisticated reporting tool to display them. The more clearly and simply the results are presented, the less effort that final review takes.

The solution

Once we had listed our requirements, it was clear that conventional solutions would not cut it. After a lengthy investigation and plenty of trial-and-error experiments, we implemented a well-trained AI module that met our quality requirements and combined it with Selenium-based libraries.

The AI module detects the language of each text label, which lets us flag language mismatches and automatically check whether translations are in place. We no longer need to hard-code every label in every language on every page, because the module works regardless of label count, language, or page. It also requires very little maintenance.
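Language detection could be complemented by a simpler, non-AI heuristic for missing translations: a label on a localized page that also appears verbatim on the English version of the same page is probably untranslated. The sketch below is hypothetical, assumes English as the source language, and uses an allowlist for brand names and other terms that legitimately stay the same across languages.

```python
from typing import Iterable, List


def find_untranslated(
    source_labels: Iterable[str],
    localized_labels: Iterable[str],
    allowlist: Iterable[str] = (),
) -> List[str]:
    """Return localized labels that also appear verbatim on the source-language page."""
    # Comparison is case-insensitive and ignores surrounding whitespace.
    source = {label.strip().casefold() for label in source_labels}
    allowed = {term.strip().casefold() for term in allowlist}
    flagged = []
    for label in localized_labels:
        key = label.strip().casefold()
        if key in source and key not in allowed:
            flagged.append(label.strip())
    return flagged


if __name__ == "__main__":
    english = ["Pricing", "Get NordVPN", "Buy now"]
    german = ["Preise", "Get NordVPN", "Buy now"]
    print(find_untranslated(english, german, allowlist={"Get NordVPN"}))  # ['Buy now']
```

Flagged labels are candidates for review rather than confirmed bugs, which fits the workflow where a QA engineer makes the final decision.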

To solve the IP localization challenge, we used Docker to build isolated, self-contained container images. Each container had to include all the required test files and dependencies and be able to establish a VPN tunnel connection regardless of who or what runs the image, and where. It also had to export and store test artefacts (reports with results, screenshots, and any bugs found) on the host machine or CI tool for evaluation, further investigation, and archiving.
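The per-country loop inside such a container might look roughly like the following sketch. The post does not describe the VPN client or the page checks, so `connect`, `disconnect` and `run_checks` are hypothetical hooks passed in as callables. The loop only guarantees that the tunnel is torn down after each country and that findings are written as JSON files to a directory which, in a container, would be mounted from the host.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List

Finding = Dict[str, str]


def run_for_countries(
    countries: List[str],
    connect: Callable[[str], None],
    disconnect: Callable[[], None],
    run_checks: Callable[[str], List[Finding]],
    artifacts_dir: Path,
) -> Dict[str, int]:
    """Run the page checks once per country and export the findings as JSON files."""
    artifacts_dir.mkdir(parents=True, exist_ok=True)
    summary: Dict[str, int] = {}
    for country in countries:
        connect(country)  # hypothetical: bring up a VPN tunnel exiting in `country`
        try:
            findings = run_checks(country)
        finally:
            disconnect()  # tear the tunnel down even if the checks raise
        out_file = artifacts_dir / f"findings-{country}.json"
        out_file.write_text(json.dumps(findings, ensure_ascii=False, indent=2), encoding="utf-8")
        summary[country] = len(findings)
    return summary


if __name__ == "__main__":
    # Stand-in hooks that only print; a real image would call its VPN client here.
    result = run_for_countries(
        ["de", "fr"],
        connect=lambda c: print(f"tunnel up: {c}"),
        disconnect=lambda: print("tunnel down"),
        run_checks=lambda c: [],
        # In a container this would be a host-mounted path (e.g. via `docker run -v`).
        artifacts_dir=Path(tempfile.mkdtemp()),
    )
    print(result)
```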

After trying several ways to display the test results, we found that Allure HTML reports were the most convenient way for reviewers to finalise them, so we included the Allure library in the container image as well.
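Allure builds its HTML report from a directory of result files that test framework adapters normally write. As an illustration only, the sketch below writes one result file per checked page by hand, assuming the Allure 2 `<uuid>-result.json` format and a hypothetical findings shape (dicts with `label`, `expected` and `detected` keys). In practice, the Allure adapter for the test framework in use would produce these files. The resulting directory can then be rendered with `allure serve <results-dir>`.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path
from typing import Dict, List


def write_allure_result(results_dir: Path, page_url: str, findings: List[Dict[str, str]]) -> Path:
    """Write one Allure 2 result file: 'failed' if there are findings, otherwise 'passed'."""
    results_dir.mkdir(parents=True, exist_ok=True)
    now_ms = int(time.time() * 1000)  # Allure timestamps are in milliseconds
    result_id = str(uuid.uuid4())
    message = "\n".join(
        f"{item['label']!r}: expected {item['expected']}, detected {item['detected']}"
        for item in findings
    )
    result = {
        "uuid": result_id,
        "name": f"Language check: {page_url}",
        "status": "failed" if findings else "passed",
        "statusDetails": {"message": message},
        "start": now_ms,
        "stop": now_ms,
        "labels": [{"name": "feature", "value": "Content language"}],
    }
    path = results_dir / f"{result_id}-result.json"
    path.write_text(json.dumps(result, ensure_ascii=False, indent=2), encoding="utf-8")
    return path


if __name__ == "__main__":
    out = write_allure_result(
        Path(tempfile.mkdtemp()),
        "https://example.com/de/pricing/",
        [{"label": "Get NordVPN", "expected": "de", "detected": "en"}],
    )
    print(out)
```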

The result

Almost all of our content testing now relies on this solution. By significantly reducing the human effort needed to test new translations, it helps us meet deadlines. It has also helped us find several bugs in our language implementation that would have been very hard to catch manually. Because the test run is fully automated, it no longer matters whether one or several new languages are being added, or how many new pages are coming up, and we no longer need to review every change individually. The tool reports any mismatches it finds, and the QA engineer only needs to review the suspicious labels and make the final decision.

What does the future hold?

We still have several challenges to solve. These include visual inspection of site structure, text, font size, and overlays, as well as broader customer experience issues. These checks are still done manually, but after the success of the content language inspection tool, we are working towards automating more of them. Broader use of AI-based modules may be the key to ensuring the quality of our website.
